Post by: Abhinav Rana

Huawei Strengthens AI Safety With DeepSeek-R1-Safe

Huawei has unveiled a new safety-focused version of its open-source language model, designed to significantly tighten control over sensitive and harmful content. The development highlights a growing trend in the artificial intelligence sector: balancing powerful AI capabilities with stricter guardrails that ensure compliance with cultural and political standards.

The new model, called DeepSeek-R1-Safe, is a modified version of the widely used DeepSeek-R1. Instead of reinventing the wheel, Huawei’s engineers focused on refining the existing system by embedding safety parameters and specialized training data. This process allows the AI to maintain most of its original strengths—such as language fluency and reasoning—while reducing its likelihood of generating problematic or politically sensitive content.

Huawei’s approach reflects a clear strategy: optimize existing models for stricter environments, rather than building smaller, limited tools. The company’s internal evaluations suggest the model has achieved near-total effectiveness in filtering out unwanted outputs under standard testing conditions.

Hardware, Data, and Precision

Behind this achievement lies a sophisticated training process. Huawei utilized its in-house Ascend AI chips, deploying thousands of processors in large-scale clusters. These machines worked in tandem to retrain the model with curated datasets aimed at reinforcing safety. The training emphasized supervised learning methods that teach the model when to decline a request, when to redirect the conversation, and when to generate safe, neutral outputs.

The project was not carried out in isolation. A major Chinese university collaborated with Huawei to fine-tune the model and evaluate its performance, combining academic insight with industrial-scale engineering. This partnership shows how the frontier of AI safety is being shaped by close cooperation between technology companies and research institutions.

Despite impressive results, Huawei acknowledges that challenges remain. While the model performed exceptionally well in straightforward tests, its resilience dropped in adversarial scenarios, in which users deliberately disguise prompts to trick the AI into bypassing its safety filters. This highlights the ongoing “cat-and-mouse” nature of AI safety: as developers tighten controls, users and bad actors devise new ways to evade them.

Global and GCC Implications

The launch of DeepSeek-R1-Safe holds implications far beyond China. Around the world, governments and regulators are demanding more responsible AI systems that align with local cultural, legal, and ethical standards. This pressure is reshaping how companies deploy advanced technologies, creating a market for AI models that are not only powerful but also compliant.

For the GCC region, where AI adoption is accelerating, Huawei’s latest model underscores the importance of aligning technology with social values. Gulf states such as the UAE and Saudi Arabia have already invested heavily in AI innovation, while also emphasizing the need for ethical and controlled applications. Models like DeepSeek-R1-Safe provide a blueprint for how global firms might tailor advanced AI tools for regional use.

Enterprises in the GCC stand to benefit from these advances: they can incorporate AI assistants and chatbots into customer service, education, and government services with greater confidence that the technology has built-in safeguards against misuse. There is a lesson here too, however: no safety system is foolproof. Local regulators and businesses will need to remain vigilant, updating standards, testing procedures, and compliance frameworks to ensure continued reliability.

Globally, Huawei’s move reflects a larger conversation about fragmentation in AI ecosystems. While open-source models often aim for universal applicability, safety-tuned versions increasingly vary by region. The same base model may act very differently in Europe, the Middle East, or Asia, depending on the safety layers applied. This creates operational complexity for multinational firms but may also ensure smoother integration within different cultural and regulatory contexts.

The debut of DeepSeek-R1-Safe demonstrates that safety in AI is not a one-time fix but a continuous process. Even with advanced training and massive computing resources, no system can guarantee absolute protection against harmful or sensitive content. What Huawei has achieved is an incremental but vital step: making it harder for the model to generate risky responses while keeping its core intelligence intact.

For businesses and policymakers in the GCC, the message is clear. AI systems must be monitored, updated, and retested regularly. Vendors should be required to provide transparent reporting on model performance, safety metrics, and incident handling. As more sectors, from finance to healthcare, integrate AI tools, the cost of an unsafe or misaligned model rises sharply.

From a competitive perspective, the global race is no longer just about building the biggest or smartest AI model. It is increasingly about building the safest usable model, one that can serve different societies without creating unnecessary risk. For Huawei, DeepSeek-R1-Safe is not just a technological milestone but also a commercial strategy, ensuring its products remain viable in a world where responsible AI is becoming a non-negotiable demand.

The GCC, with its fast-growing digital economy and strong emphasis on regulation, could emerge as one of the most important testing grounds for these developments. If regional authorities adopt strong frameworks for AI safety, they may attract global firms eager to demonstrate compliance while tapping into a tech-savvy market.

Huawei’s DeepSeek-R1-Safe is more than just another AI model release; it is a clear signpost of where the industry is heading. The future of artificial intelligence will be defined not only by how powerful models become but also by how safe, adaptable, and compliant they are across different regions. For GCC countries and their citizens, this moment represents both an opportunity and a challenge: to harness the benefits of AI while ensuring its growth remains anchored in cultural, ethical, and social responsibility.

Final Solar Eclipse Of 2025 To Occur On September 21

The final solar eclipse of 2025 on September 21 will be partial, visible in the Southern Hemisphere

Suryakumar Yadav Focuses on Team, Avoids Pakistan Talks Ahead

India captain Suryakumar Yadav avoids Pakistan mentions, urges focus on team, and asks fans for supp

India's Asia Cup Win Over Oman Highlights Team's Strengths & Depth

India beat Oman by 21 runs in Asia Cup, with key contributions from Hardik Pandya, Abhishek Sharma,

Arshdeep Singh Becomes First Indian Fast Bowler to 100 T20I Wickets

Arshdeep Singh becomes first Indian fast bowler to reach 100 T20I wickets, while Hardik Pandya equal

Oracle in Talks With Meta for $20 Billion Cloud Computing Deal

Oracle is negotiating a $20 billion cloud computing deal with Meta to support AI model training, mar

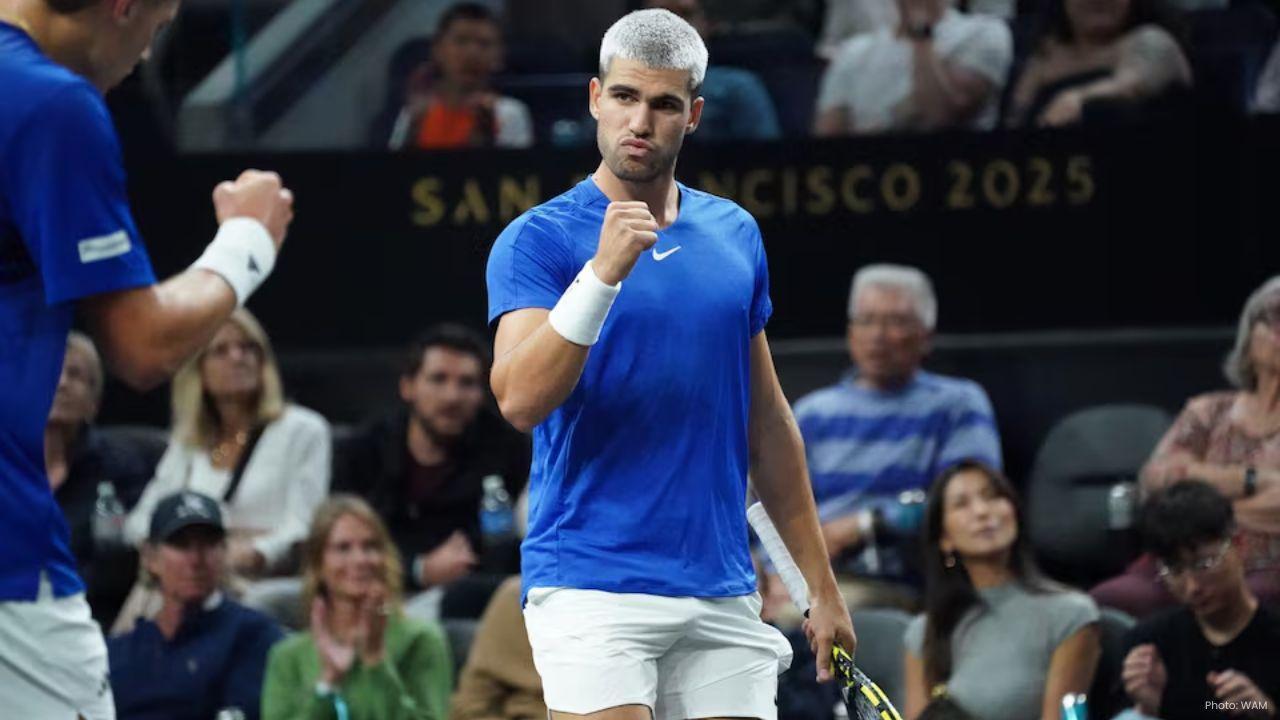

Alcaraz and Mensik Lead Team Europe to Doubles Win at Laver Cup

Carlos Alcaraz and Jakub Mensik help Team Europe beat Team World in doubles at the Laver Cup. Casper